In this blog, we will learn what Distributed Shared Memory is, why we use it, and what its advantages and disadvantages are. So let’s get started.

Table of Contents

- Introduction to Distributed Shared Memory

- The architecture of Distributed Shared Memory

- Advantages

- Disadvantages

- Types of Distributed Shared Memory

- Conclusion

- FAQ

Introduction to Distributed Shared Memory

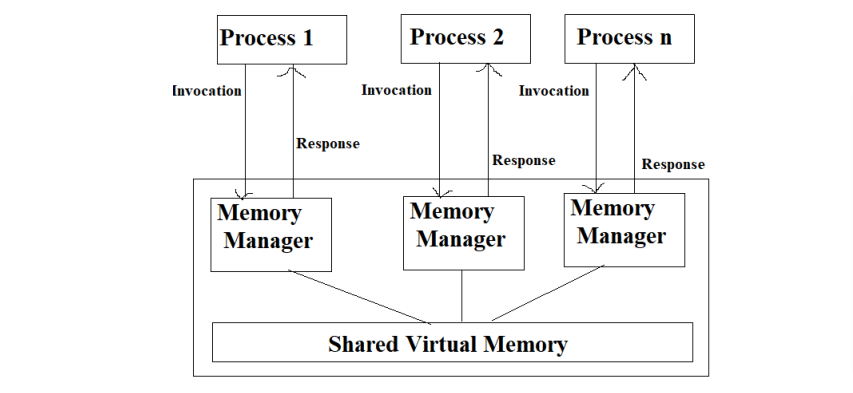

Distributed shared memory (DSM) is a type of parallel computing architecture that allows multiple processors in a network to access the same memory space as if they were working on a single shared memory system. This approach enables applications to be parallelized, which is essential for achieving high-performance computing (HPC) in modern computing environments.

In a DSM system, the memory is physically distributed across multiple nodes in a network. Each node has its own local memory, and these memories are logically combined to create a single, shared address space.

When a processor accesses a memory location, it sends a message to the node responsible for that location, which retrieves the data from its local cache and sends it back to the requesting processor. The DSM system ensures that all nodes see a consistent view of the shared memory, despite the fact that each node maintains its own cache.
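To make this request/reply flow concrete, here is a minimal Python sketch. It is a toy model under stated assumptions, not how any particular DSM system is implemented: the names `ToyDSM`, `Node`, and `home_of`, the page size of 4 addresses, and the round-robin rule for choosing the node responsible for a page are all made up for illustration, and messages are only counted, not sent over a real network.

```python
class Node:
    """One node holding the slice of shared memory it is responsible for."""

    def __init__(self, node_id):
        self.node_id = node_id
        self.local = {}  # address -> value, only for addresses this node owns


class ToyDSM:
    """Toy shared address space spread over several nodes (illustrative only)."""

    def __init__(self, num_nodes, page_size=4):
        self.nodes = [Node(i) for i in range(num_nodes)]
        self.page_size = page_size
        self.messages = 0  # counts simulated network messages

    def home_of(self, address):
        # Assumption: pages are assigned to nodes round-robin.
        page = address // self.page_size
        return self.nodes[page % len(self.nodes)]

    def read(self, requester_id, address):
        home = self.home_of(address)
        if home.node_id != requester_id:
            self.messages += 2  # one request plus one reply
        return home.local.get(address, 0)

    def write(self, requester_id, address, value):
        home = self.home_of(address)
        if home.node_id != requester_id:
            self.messages += 2  # one request plus one acknowledgement
        home.local[address] = value


dsm = ToyDSM(num_nodes=3)
dsm.write(0, 5, 42)       # address 5 lives on node 1, so node 0 needs messages
value = dsm.read(2, 5)    # node 2 also has to ask node 1
local = dsm.read(1, 5)    # node 1 reads its own memory, no messages
```

Every node uses the same `read` and `write` calls regardless of where the data lives, which is the point of the shared address space. Only the message counter reveals that some accesses were remote.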

Although a distributed system has no physically shared memory, distributed shared memory (DSM) implements the shared memory model on top of it: all nodes share a single virtual address space.

The architecture of Distributed Shared Memory

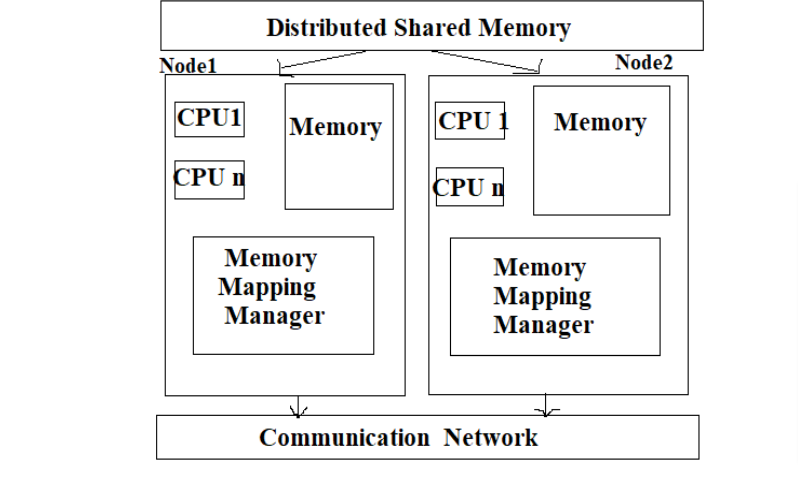

Each node in the system consists of one or more CPUs and a memory unit. A high-speed communication network links the nodes, and a basic message-passing system carries information between them. Each node caches a portion of the shared memory space in its main memory.

A) Memory Mapping Manager Unit

In a distributed shared memory (DSM) system, the Memory Mapping Manager unit (called the MMU Manager below, and not to be confused with a CPU's hardware memory management unit) is responsible for managing the mapping between shared virtual addresses and physical memory addresses across the nodes of the distributed system.

The MMU Manager maintains a mapping table that records the mappings of virtual addresses to physical addresses on each node. When a node needs to access a virtual address, it first consults the mapping table to determine the physical address to which the virtual address is mapped.

The MMU Manager also handles memory allocation and deallocation across multiple nodes in the DSM system. When a node requests a block of memory, the MMU Manager checks the mapping table to determine if there is enough available physical memory to fulfill the request. If there is, the MMU Manager updates the mapping table to reflect the allocation.

Similarly, when a node releases a block of memory, the MMU Manager updates the mapping table to reflect the deallocation.
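The mapping table and the allocate/release steps described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the class name `MappingManager`, the page/frame granularity, and the policy of placing each new page on the node with the most free frames are hypothetical choices, not something a real DSM system is required to do.

```python
class MappingManager:
    """Toy mapping table: virtual page -> (node_id, physical frame)."""

    def __init__(self, frames_per_node):
        # frames_per_node: {node_id: number of physical frames on that node}
        self.free = {n: list(range(count)) for n, count in frames_per_node.items()}
        self.table = {}
        self.next_vpage = 0

    def allocate(self, num_pages):
        # Check that enough physical memory is available before touching the table.
        total_free = sum(len(frames) for frames in self.free.values())
        if num_pages > total_free:
            raise MemoryError("not enough physical memory in the system")
        pages = []
        for _ in range(num_pages):
            # Assumed policy: place the page on the node with the most free frames.
            node_id = max(self.free, key=lambda n: len(self.free[n]))
            frame = self.free[node_id].pop()
            self.table[self.next_vpage] = (node_id, frame)
            pages.append(self.next_vpage)
            self.next_vpage += 1
        return pages

    def release(self, pages):
        # Remove each mapping and return its frame to the owning node.
        for vpage in pages:
            node_id, frame = self.table.pop(vpage)
            self.free[node_id].append(frame)

    def translate(self, vpage):
        # Look up where a virtual page physically lives.
        return self.table[vpage]


manager = MappingManager({0: 2, 1: 2})
pages = manager.allocate(3)      # three virtual pages spread over two nodes
manager.release(pages[:1])       # give one page back
```

After these calls the table holds two mappings and two frames are free, so a further request for three pages would be refused with `MemoryError`.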

In addition, the MMU Manager is responsible for maintaining coherence across the distributed memory system. It ensures that all nodes in the system have consistent views of the shared memory and that any updates made by one node are propagated to all other nodes.
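The text above does not say which coherence protocol is used, so the sketch below picks one common option, write-invalidate, purely as an example. The class `CoherentPage` and its fields are hypothetical names, and the model tracks a single page: before a node writes, every other cached copy is discarded, so the next read on those nodes fetches the fresh value.

```python
class CoherentPage:
    """Write-invalidate bookkeeping for one shared page (illustrative only)."""

    def __init__(self, value=0):
        self.value = value       # authoritative copy of the page
        self.copies = {}         # node_id -> cached value
        self.invalidations = 0   # how many cached copies were discarded

    def read(self, node_id):
        # On a miss, fetch the authoritative value and cache it locally.
        if node_id not in self.copies:
            self.copies[node_id] = self.value
        return self.copies[node_id]

    def write(self, node_id, value):
        # Invalidate every other node's cached copy before writing.
        stale = [n for n in self.copies if n != node_id]
        for n in stale:
            del self.copies[n]
        self.invalidations += len(stale)
        self.value = value
        self.copies[node_id] = value


page = CoherentPage(value=1)
first = page.read(0)     # node 0 caches the page
second = page.read(1)    # node 1 caches the page
page.write(0, 7)         # node 1's copy is invalidated
fresh = page.read(1)     # node 1 misses and fetches the new value
```

An alternative design is write-update, where the new value is pushed to every copy instead of discarding them. Which one performs better depends on how often the other nodes actually re-read the data.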

Overall, the MMU Manager plays a critical role in the efficient and effective management of distributed shared memory in a multi-node system.

B) Communication Network Unit

In a distributed shared memory (DSM) system, the Communication Network Unit (CNU) is responsible for managing the communication between nodes in the distributed system.

The CNU acts as a bridge between the MMU Manager and the network interface on each node. It is responsible for transmitting and receiving messages between nodes, such as requests for memory access or updates to shared memory.

The CNU also handles the routing of messages between nodes. It uses a variety of techniques, such as multicast or broadcast, to efficiently transmit messages to multiple nodes simultaneously.

In addition, the CNU manages the flow control and congestion control of messages in the network. It ensures that the network does not become congested and that messages are delivered in a timely and reliable manner.

The CNU may also implement various optimizations to improve the performance of the DSM system. For example, it may use caching techniques to reduce the number of messages that need to be transmitted over the network.
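As a rough illustration of why multicast helps, the sketch below counts transmissions when one node notifies three others. It is a toy model, not a real networking API: `ToyNetwork` is a hypothetical class, and it assumes the underlying network can deliver a single multicast transmission to every member of a group.

```python
class ToyNetwork:
    """Counts transmissions for unicast vs. multicast delivery (illustrative)."""

    def __init__(self, node_ids):
        self.inboxes = {n: [] for n in node_ids}
        self.transmissions = 0

    def unicast(self, src, dst, payload):
        # One transmission reaches exactly one destination.
        self.inboxes[dst].append((src, payload))
        self.transmissions += 1

    def multicast(self, src, dsts, payload):
        # Assumption: the network delivers one transmission to the whole group.
        for dst in dsts:
            self.inboxes[dst].append((src, payload))
        self.transmissions += 1


net = ToyNetwork([0, 1, 2, 3])
for dst in (1, 2, 3):
    net.unicast(0, dst, "invalidate page 7")
after_unicast = net.transmissions          # three separate sends

net.multicast(0, [1, 2, 3], "invalidate page 7")
after_multicast = net.transmissions        # only one more send
```

Both approaches leave the same message in every inbox. The difference is the number of transmissions the sender has to make.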

Overall, the Communication Network Unit is a critical component of a distributed shared memory system, as it enables efficient and reliable communication between nodes in the system.

Advantages

Some advantages of DSM include:

- Shared memory abstraction: DSM allows multiple nodes to share a common memory space, providing a unified view of memory to all processes in the system. This abstraction simplifies programming, as processes can interact with shared memory using standard memory operations like read and write.

- Scalability: DSM provides a way to scale a shared memory system beyond the capacity of a single node. By distributing memory across multiple nodes, DSM can increase the total available memory in the system.

- Performance: Once shared data has been cached on the node that uses it, repeated accesses run at local-memory speed instead of paying for a network round trip each time. DSM can also move data in larger blocks, which reduces the per-message overhead (such as copying and serialization) that comes with sending many small explicit messages.

- Ease of programming: DSM can simplify programming by providing a uniform memory abstraction across nodes. This abstraction allows developers to write parallel programs using familiar shared memory programming models like threads and locks.

- Transparency: DSM can provide a transparent view of memory to processes, meaning that processes do not need to know the physical location of memory or how it is accessed. This transparency allows applications to be developed and tested on a single node, then easily deployed to a distributed environment.
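To show what the "threads and locks" programming model mentioned above looks like, here is a small Python example. Note the assumption: it runs on a single machine with ordinary threads from the standard library and does not use any DSM system. The idea behind DSM is that code written in this style could keep the same shape even when the shared variable lives on another node.

```python
import threading

counter = 0                 # the shared variable
lock = threading.Lock()     # protects the shared variable


def worker(increments):
    """Add to the shared counter, taking the lock for each update."""
    global counter
    for _ in range(increments):
        with lock:
            counter += 1


def run(num_threads=4, increments=1000):
    """Start the workers, wait for them, and return the final counter value."""
    global counter
    counter = 0
    threads = [
        threading.Thread(target=worker, args=(increments,))
        for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


total = run(num_threads=4, increments=1000)
```

The workers never send each other messages. They only read and write `counter`, and the lock keeps the updates from interfering with each other.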

Disadvantages

Some disadvantages of using DSM include:

- Scalability: DSM systems can become less efficient as the number of nodes in the system increases. This is because the communication needed to keep every node's view of the shared memory consistent grows with the number of nodes.

- Consistency: Maintaining consistency of the shared memory across multiple nodes can be difficult. If different nodes are accessing and modifying the same memory locations at the same time, it can lead to inconsistencies and race conditions.

- Latency: Accessing shared memory in a distributed system can be slower than accessing local memory due to network latency and communication overhead.

- Complexity: DSM systems can be complex to implement and maintain. They require dedicated software support and sometimes specialized hardware, and programming for DSM can be challenging.

- Fault tolerance: DSM systems can be vulnerable to failures. If one node fails or becomes unavailable, it can affect the entire system and cause inconsistencies in the shared memory.
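The latency point can be made concrete with a little arithmetic. The function below is a simple weighted average, and the numbers in the example (100 ns for a local access, 100 µs for a remote one, 1% of accesses remote) are assumptions chosen only to illustrate the effect, not measurements of any real system.

```python
def average_access_time(local_ns, remote_ns, remote_fraction):
    """Weighted average access time when some accesses go over the network."""
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be between 0 and 1")
    return (1.0 - remote_fraction) * local_ns + remote_fraction * remote_ns


# Assumed numbers: 100 ns local, 100,000 ns remote, 1% of accesses remote.
avg_ns = average_access_time(local_ns=100, remote_ns=100_000, remote_fraction=0.01)
slowdown = avg_ns / 100
```

With these assumed numbers the average comes out to roughly 1,099 ns, about 11 times slower than purely local access, even though only 1 in 100 accesses is remote. This is why keeping data close to the node that uses it matters so much in DSM.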

Overall, while DSM can be useful in certain contexts, it may not always be the best choice for distributed systems. Other architectures, such as message-passing or peer-to-peer systems, may be more appropriate depending on the specific requirements of the application.

Types of Distributed Shared Memory

There are four types of distributed shared memory in a distributed system, which are as follows:

1) On-Chip Memory

On-chip memory can be used as a type of distributed shared memory (DSM) in a multi-core or multi-processor system. On-chip memory refers to memory that is physically located on the same chip as the processor, rather than in external memory modules.

Advantages:

- Lower latency: Accessing on-chip memory is generally faster than accessing external memory due to the shorter distances involved.

- Lower power consumption: Since accessing on-chip memory requires less energy than accessing external memory, using on-chip memory as a DSM can help reduce power consumption in a multi-core or multi-processor system.

Disadvantages:

- Limited capacity: On-chip memory is typically limited in capacity compared to external memory, so it may not be suitable for storing large amounts of shared data.

- Limited scalability: Using on-chip memory as a DSM may not scale well for systems with a large number of processors, as the amount of on-chip memory required to maintain a shared memory address space grows with the number of processors.

Applications:

Single-chip computers with on-chip memory are widely used in appliances, cars, and even toys. Extending such a chip so that several processors share its on-chip memory, however, is complex and expensive.

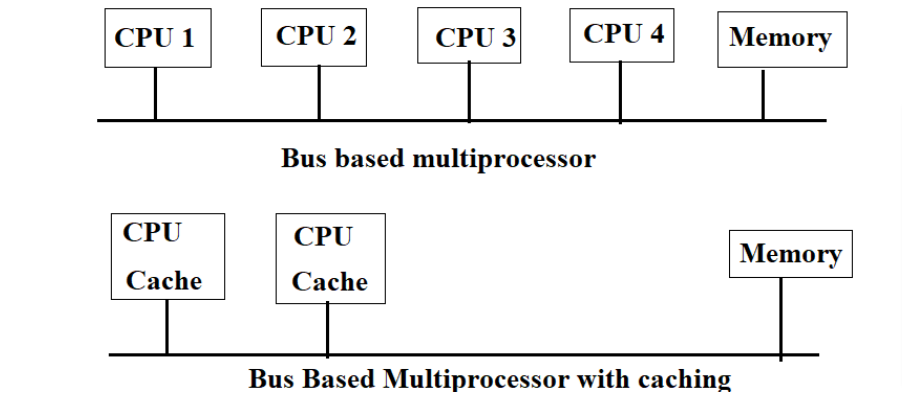

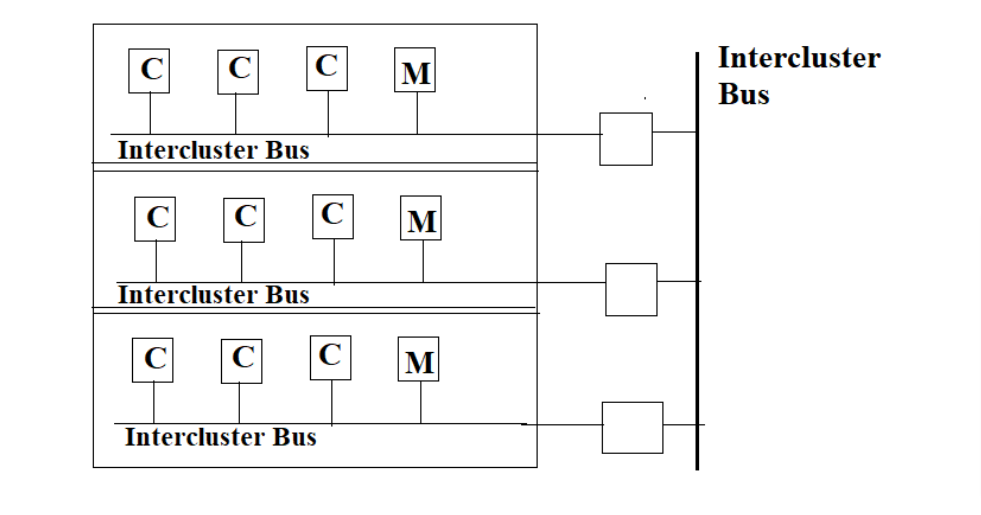

2) Bus-Based Multiprocessors

A bus-based multiprocessor in distributed shared memory (DSM) is a type of computer architecture that consists of multiple processors connected to a shared bus that provides access to a distributed shared memory system. In this architecture, each processor has its own local cache memory, and the shared memory is distributed across these caches.

The bus is responsible for coordinating communication between processors and memory, and it can be a bottleneck in the system if the number of processors increases beyond a certain point. Therefore, bus-based multiprocessors are typically limited to a relatively small number of processors.

Each CPU has its own cache to reduce bus traffic. Bus arbitration and cache-consistency algorithms are used to stop two CPUs from accessing the same memory at the same time. Because there is only one bus, it can easily become overloaded.
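A back-of-the-envelope estimate shows why a single bus limits the number of processors. The two helper functions below are hypothetical, and the workload numbers (each CPU generating one million cache misses per second, each miss occupying the bus for 100 ns) are assumptions picked for the example.

```python
NS_PER_SECOND = 1_000_000_000


def bus_utilization(num_cpus, misses_per_sec_per_cpu, bus_ns_per_miss):
    """Fraction of each second the bus is busy serving cache misses."""
    busy_ns = num_cpus * misses_per_sec_per_cpu * bus_ns_per_miss
    return busy_ns / NS_PER_SECOND


def max_cpus_before_saturation(misses_per_sec_per_cpu, bus_ns_per_miss):
    """Largest CPU count that keeps the bus at or below 100% utilization."""
    return NS_PER_SECOND // (misses_per_sec_per_cpu * bus_ns_per_miss)


# Assumed workload: 1,000,000 misses per second per CPU, 100 ns of bus time each.
utilization_4_cpus = bus_utilization(4, 1_000_000, 100)
cpu_limit = max_cpus_before_saturation(1_000_000, 100)
```

Under these assumptions four CPUs keep the bus 40% busy, and the bus is fully saturated at ten CPUs. Better caches lower the miss rate and push that limit up, but they do not remove it.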

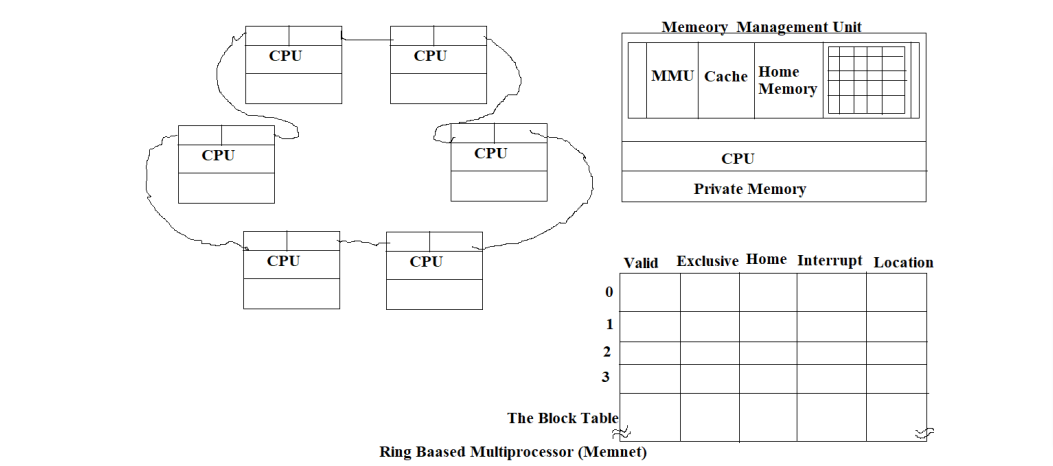

3) Ring-Based Multiprocessors

A ring-based multiprocessor is a type of parallel computer architecture that consists of a set of processors connected in a ring topology. In this topology, each processor is connected to its neighbors in a circular manner, forming a closed loop. The communication between processors is achieved by passing messages along the ring.

In a distributed shared memory (DSM) system, multiple processors access a shared memory space. This memory space is physically distributed across multiple nodes, and each node has its own memory. The processors communicate with each other by reading and writing in this shared memory space.

In a ring-based multiprocessor DSM system, each processor is connected to its neighbors in the ring, and they can access the shared memory space by sending messages around the ring. This means that a processor can read or write to a memory location on another processor’s node by sending a message around the ring to that node.
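The cost of sending a message around the ring can be sketched as a hop count. The functions below assume a unidirectional ring in which nodes are numbered 0 to N-1 and messages only travel toward the next higher number (wrapping around at the end). That direction rule and the function names are assumptions made for this example.

```python
def ring_hops(src, dst, num_nodes):
    """Hops a message needs from src to dst on a unidirectional ring."""
    return (dst - src) % num_nodes


def round_trip_hops(src, dst, num_nodes):
    """Hops for a request from src to dst plus the reply back to src."""
    return ring_hops(src, dst, num_nodes) + ring_hops(dst, src, num_nodes)


request = ring_hops(1, 3, num_nodes=8)   # node 1 asks node 3
reply = ring_hops(3, 1, num_nodes=8)     # the reply continues around the ring
total = round_trip_hops(1, 3, num_nodes=8)
```

On a ring like this, a remote read always costs one full trip around the ring: the request covers part of the loop and the reply covers the rest. So the round-trip time grows with the number of nodes.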

4) Switched Multiprocessor

A switched multiprocessor in distributed shared memory (DSM) is a type of computer architecture that combines elements of both shared memory and distributed memory systems.

In a switched multiprocessor DSM, multiple processors are connected through a high-speed switch or network, allowing them to share a common address space and access data stored in shared memory. Each processor has its own local memory, but the shared memory is accessible to all processors in the system.

When a processor needs to access a particular portion of shared memory, it sends a request to the switch, which then routes the request to the appropriate memory module. The memory module responds to the request and sends the requested data back to the processor.
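The routing step can be sketched as follows. This is a simplified model under assumptions: the classes `Switch` and `MemoryModule` are hypothetical, and each memory module is assumed to own one contiguous block of the shared address space, so the switch can find the right module with a single division.

```python
class MemoryModule:
    """One memory module attached to the switch."""

    def __init__(self, size):
        self.cells = [0] * size


class Switch:
    """Routes each shared address to the module that owns it (illustrative)."""

    def __init__(self, num_modules, module_size):
        self.module_size = module_size
        self.modules = [MemoryModule(module_size) for _ in range(num_modules)]

    def route(self, address):
        # Assumption: module i owns addresses [i * size, (i + 1) * size).
        index, offset = divmod(address, self.module_size)
        if not 0 <= index < len(self.modules):
            raise IndexError("address outside the shared address space")
        return self.modules[index], offset

    def read(self, address):
        module, offset = self.route(address)
        return module.cells[offset]

    def write(self, address, value):
        module, offset = self.route(address)
        module.cells[offset] = value


switch = Switch(num_modules=4, module_size=1024)
switch.write(2050, 9)         # lands in module 2 at offset 2
result = switch.read(2050)
```

Requests for addresses in different modules can be served independently, which is where a switch gains over a single shared bus.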

Switched multiprocessor DSMs can offer high performance and scalability since they allow multiple processors to work on a shared set of data. However, they also have some disadvantages, such as increased complexity and the potential for contention and congestion in the switch or network.

Conclusion

Distributed shared memory lets end-user programs access shared data without using explicit inter-process communication. The DSM system handles that communication itself, so it is transparent to the end user.

With DSM, variables can be shared directly, and the cost of transferring them is hidden from the programmer, although it still affects performance.

FAQ

What is Distributed Shared Memory?

DSM stands for Distributed Shared Memory. It is a type of parallel computing architecture that allows multiple processors in a network to access the same memory space as if they were working on a single shared memory system.

This approach enables applications to be parallelized, which is essential for achieving high-performance computing (HPC) in modern computing environments.

What are the types of Distributed Shared Memory?

1) On-Chip Memory

2) Bus-Based Multiprocessors

3) Ring-Based Multiprocessors

4) Switched Multiprocessor

Why do we use Distributed Shared Memory?

Distributed shared memory lets end-user programs access shared data without using explicit inter-process communication. The DSM system handles that communication itself, so it is transparent to the end user.